How I deploy OpenShift in a hurry

Like many posts, this one is going to be quick and rough.

- Today is Nov 2, 2023, and the latest release of OpenShift is currently version 4.14.

- If you want a specific version of OpenShift, click here.

My previous work with OpenShift

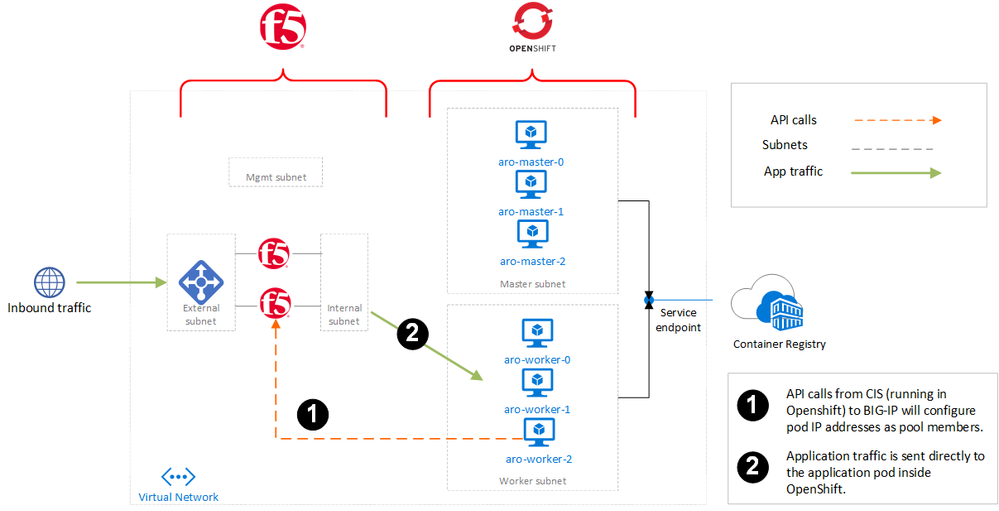

I have needed to dive into Red Hat OpenShift now and then in the course of my work at F5. We’re a great partner of Red Hat and we have many mutual customers.

My most public-facing work so far was automating this architecture for an article I wrote about integrating F5 BIG-IP into Azure Red Hat OpenShift (ARO).

Article: Running F5 with managed Azure RedHat OpenShift

How I prefer to deploy OpenShift in a hurry

For a lab or demo I like a cheaper option than ARO, and also something that I can power down easily to reduce cost. Here are instructions for quickly deploying a self-managed OpenShift cluster in AWS.

Pre-requisites

- Create a VPC in AWS.

  - You need at least 4x subnets in 2x Availability Zones:

    - 2x external subnets with a default route out to an Internet Gateway (must be public-facing).

    - 2x internal subnets (don’t need to be Internet-facing).

  - You must enable the `enableDnsSupport` and `enableDnsHostnames` attributes in your VPC.

- Register for an account with Red Hat and get access to a pull secret.

- You will want a public domain name to use. I use `my-f5.com`. I have registered this with GoDaddy and I have this set up as a public hosted zone in AWS, and also as a private hosted zone with the same name. The private hosted zone must be linked to the VPC you have created above.

- Prepare a file called `install-config.yaml`. I have an example here. You can see I have pre-filled most values, but you will need to edit at least the following fields: `baseDomain`, `platform>aws>subnets`, `pullSecret`, and `sshKey`.

    baseDomain: my-f5.com # you will need a domain that is hosted in AWS Route53 here
    platform:
      aws:
        region: us-east-1 # the AWS region that your VPC is in
        subnets:
        - subnet-xxxxxxxxxxxxxxxxx # your public subnet id in AZ1
        - subnet-xxxxxxxxxxxxxxxxx # your public subnet id in AZ2
        - subnet-xxxxxxxxxxxxxxxxx # your private subnet id in AZ1
        - subnet-xxxxxxxxxxxxxxxxx # your private subnet id in AZ2
    publish: External
    pullSecret: 'my-pull-secret' # get your pull secret from https://cloud.redhat.com/openshift/install/pull-secret
    sshKey: | # use your own key here to allow you to access the EC2 instances created with ssh
      ssh-rsa xxx...yyy imported-openssh-key

- Download two binaries from Red Hat here: https://cloud.redhat.com/openshift/install/aws/user-provisioned.

  - You can choose to get the installer for macOS or Linux, and the CLI can be downloaded for macOS, Linux, or Windows.

  - Download the installer (UPI – User Provisioned Infrastructure).

  - Also, you’ll need to download the client CLI from the same URL above. This gets installed on your laptop and you use it to interact with OpenShift.
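Before running the installer, it can help to sanity-check the prerequisites above. The following is a minimal sketch and not part of my original workflow: `check_vpc_dns` and `check_install_config` are hypothetical helper names, the VPC ID you pass in is your own, and the first function assumes the AWS CLI is installed and has credentials for the account that owns the VPC.

```shell
#!/bin/sh
# Pre-flight checks (sketch). Both function names are hypothetical helpers.

# Verify that enableDnsSupport and enableDnsHostnames are enabled on a VPC.
# Assumes the AWS CLI is installed and configured.
check_vpc_dns() {
  vpc_id="$1"
  for attr in enableDnsSupport enableDnsHostnames; do
    # The response key is the attribute name with a capital first letter.
    key="E${attr#e}"
    # describe-vpc-attribute accepts one attribute per call.
    value=$(aws ec2 describe-vpc-attribute \
      --vpc-id "$vpc_id" --attribute "$attr" \
      --query "${key}.Value" --output text) || return 1
    case "$value" in
      [Tt]rue) echo "$attr: enabled" ;;
      *) echo "$attr: NOT enabled" >&2; return 1 ;;
    esac
  done
}

# Fail if install-config.yaml still contains the placeholder values
# from the example above.
check_install_config() {
  config="$1"
  [ -f "$config" ] || { echo "missing: $config" >&2; return 1; }
  if grep -q -F -e 'subnet-xxxxxxxxxxxxxxxxx' \
                -e "'my-pull-secret'" \
                -e 'xxx...yyy' "$config"; then
    echo "placeholders still present in $config" >&2
    return 1
  fi
  echo "$config looks edited"
}

# Usage (with your own VPC ID in place of <your-vpc-id>):
#   check_vpc_dns <your-vpc-id> && check_install_config install-config.yaml
```

The placeholder check only catches values copied unchanged from the example; it does not validate that the subnets or pull secret are actually correct.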

Installation directions

- Follow these instructions for installing OpenShift onto existing VPC in AWS: https://docs.openshift.com/container-platform/4.14/installing/installing_aws/installing-aws-vpc.html#installation-obtaining-installer_installing-aws-vpc

- Uncompress the downloaded files.

- You will need to create a config file called `install-config.yaml` that will contain details of your deployment. In this example the most important details to be aware of are the subnet IDs.

  - I have an example `install-config.yaml` file for you here.

- Make sure you create a new directory for every OpenShift cluster you create. In my example, my directory is called `install_ocp`.

tar -xzvf openshift-install-linux.tar.gz

tar -xzvf openshift-client-linux.tar.gz

mkdir install_ocp

cp install-config.yaml-backup install_ocp/install-config.yaml

./openshift-install create cluster --dir=install_ocp/ --log-level=info

Important: Do not delete the installation program or the files that the installation program creates. Both are required to delete the cluster.
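Because each cluster needs its own directory, and because the installer consumes the `install-config.yaml` it finds there (which is why the commands above copy from a backup), the preparation step can be wrapped in a small guard. This is only a sketch: `prepare_install_dir` is a hypothetical helper, and the file names simply follow the example commands above.

```shell
#!/bin/sh
# prepare_install_dir is a hypothetical helper, not part of openshift-install.
# It refuses to reuse a directory that already holds files from an earlier
# cluster, then copies a backup config into place.
prepare_install_dir() {
  backup="$1"   # e.g. install-config.yaml-backup
  dir="$2"      # e.g. install_ocp
  [ -f "$backup" ] || { echo "no such file: $backup" >&2; return 1; }
  if [ -d "$dir" ] && [ -n "$(ls -A "$dir")" ]; then
    echo "$dir is not empty; use a new directory per cluster" >&2
    return 1
  fi
  mkdir -p "$dir" && cp "$backup" "$dir/install-config.yaml"
}

# Usage, mirroring the commands above:
#   prepare_install_dir install-config.yaml-backup install_ocp &&
#     ./openshift-install create cluster --dir=install_ocp/ --log-level=info
```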

Access the cluster

- Once your cluster build is complete you will see in the logs that your User Interface is ready.

  - The default username is `kubeadmin` and the password will be in the logs.

- Use the `oc` utility or the `kubectl` command line to authenticate and administer your cluster.

- You should not need to connect to the EC2 instances via ssh. But if you want to, you should be able to reach them with the username `core` and the private key associated with the public key that you entered into the `install-config.yaml` file.
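Besides printing them in the log, the installer writes the cluster credentials into the installation directory, under `auth/`. Here is a minimal sketch of picking them up, assuming the `install_ocp` directory from the example above; `show_cluster_access` is a hypothetical helper name.

```shell
#!/bin/sh
# show_cluster_access is a hypothetical helper. It relies on the files that
# openshift-install writes under <install dir>/auth/.
show_cluster_access() {
  dir="${1:-install_ocp}"
  kubeconfig="$dir/auth/kubeconfig"
  password_file="$dir/auth/kubeadmin-password"
  for f in "$kubeconfig" "$password_file"; do
    [ -f "$f" ] || { echo "not found: $f (is the install complete?)" >&2; return 1; }
  done
  # Print the line to run in your shell, and where the password lives.
  echo "export KUBECONFIG=$kubeconfig"
  echo "kubeadmin password file: $password_file"
}

# Usage: run the printed export line in your shell, then for example:
#   oc whoami
#   oc get nodes
```

With `KUBECONFIG` exported, both `oc` and `kubectl` authenticate as the cluster admin without an interactive login.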

Destroy the cluster

Instructions for destroying the cluster are here.

This will not destroy the AWS infrastructure resources you created yourself (VPC, Route53 domain, etc.), so you will need to clean those up manually.

./openshift-install destroy cluster --dir=install_ocp/ --log-level=info
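The destroy command depends on the files the installer left in the installation directory, in particular `metadata.json`. A small guard can catch the case where you point it at the wrong directory. This is a sketch under that assumption; `destroy_cluster` and the `INSTALLER` override variable are hypothetical names of my own.

```shell
#!/bin/sh
# destroy_cluster is a hypothetical wrapper around the destroy command above.
# INSTALLER can be overridden; it defaults to the binary used in this post.
destroy_cluster() {
  dir="${1:-install_ocp}"
  installer="${INSTALLER:-./openshift-install}"
  # The installer records cluster metadata in this file at create time
  # and reads it back to find what to delete.
  if [ ! -f "$dir/metadata.json" ]; then
    echo "no metadata.json in $dir; nothing to destroy from here" >&2
    return 1
  fi
  "$installer" destroy cluster --dir="$dir" --log-level=info
}

# Usage:
#   destroy_cluster install_ocp
```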